Self-Driving Cars In Minnesota: Are They Dangerous?

It’s a tantalizing vision: Grab a cup of coffee and your iPad, hop in your driverless car and be whisked to work while catching up on the latest headlines, emails or YouTube cat videos!

Not so far-fetched, say those following the latest robot car technologies, some of whom predict fully autonomous vehicles by 2020. Nearly every automaker you have ever heard of is hard at work creating its own self-driving vehicle. Others, like tech giant Google and ride-sharing companies such as Uber, are also trying to get in on the action.

But hold on there, George Jetson fans! A lot has to happen before this vision becomes a reality, starting with serious concerns about the safety and legal rights of riders who put their fate in the hands of a robot driver. So let’s start this conversation with a look at where self-driving technology stands now and at the many serious challenges still facing regulators, safety advocates and the driving public.

Explaining Autonomous Driving Levels

Elements of self-driving technology have been around for decades, from cruise control in the 1950s to electronic stability control in the mid-1990s to today’s automatic braking, lane departure warnings and self-parking.

Because cars and trucks with widely varying technologies share the road at the same time, engineers classify them using a six-level system (Levels 0 through 5) defined by the standards organization SAE International:

Levels 0, 1 and 2

Level 0 cars have no driving automation, though they may offer warnings such as lane departure alerts. Level 1 (driver assistance) and Level 2 (partial automation) vehicles automate some driving tasks, such as adaptive cruise control or lane-keeping assistance, but still require the driver to monitor the road at all times.

Levels 3, 4 and 5

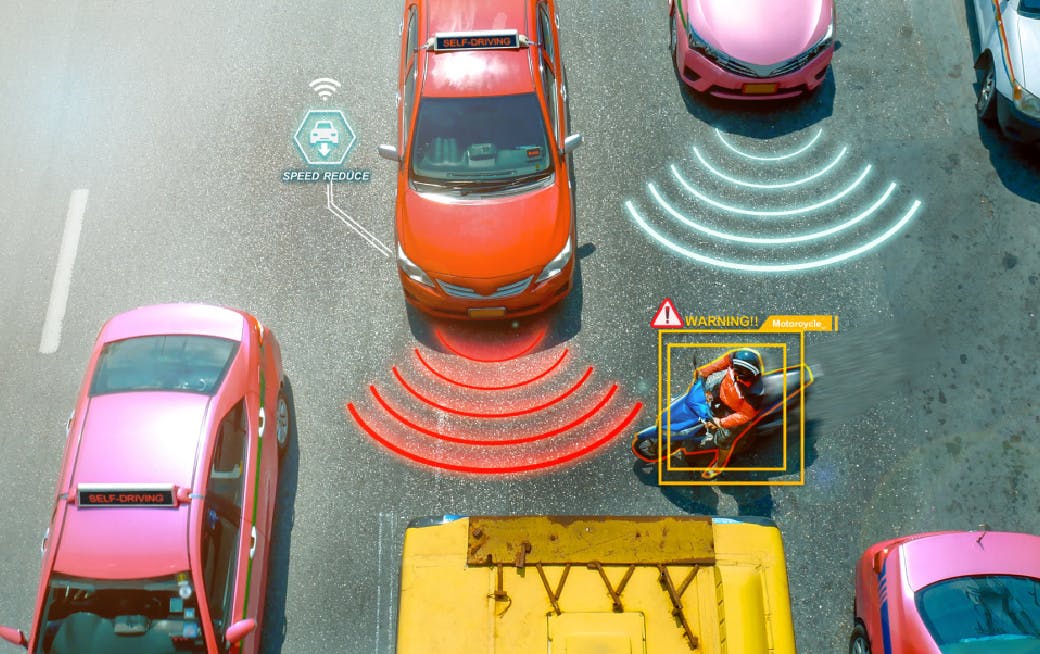

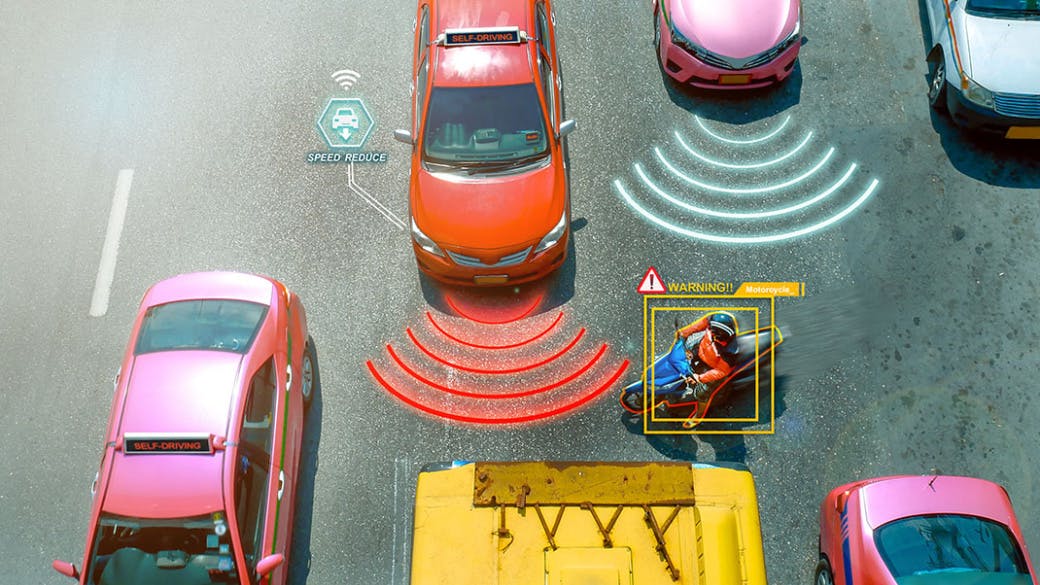

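Level 3 vehicles (conditional automation) monitor the driving environment with cameras, radar and LIDAR (sensors that measure distances by bouncing pulses of laser light off surrounding objects) and can handle the driving under certain conditions, but the driver must be ready to take over when the system requests it. Level 4 vehicles (high automation) can drive themselves without human intervention, but only in limited areas or conditions. Level 5 vehicles (full automation) can drive themselves anywhere, under any conditions a human driver could handle.

The most advanced systems commercially available today, such as Tesla’s Autopilot and Mercedes-Benz’s Drive Pilot, operate at Level 2 or Level 3, so a human driver must still be ready to take control. Even so, traditional carmakers, along with Google and Uber, are testing fully automated vehicles today and predict commercial sales by 2020.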

Injury Accidents Heighten Concerns About Self-Driving Cars In Minnesota, Elsewhere

Well-publicized crashes involving self-driving vehicles have sparked concern among public officials and legal action by drivers and their families.

A woman was recently struck and killed in Tempe, Arizona, by a self-driving vehicle operated by Uber as she walked her bicycle across the street. Although a safety driver was behind the wheel, the vehicle was in autonomous mode at the time of the crash. Uber responded by suspending its testing of self-driving vehicles in Tempe, San Francisco and Pittsburgh.

At the time of writing, this is the only pedestrian death caused by an autonomous vehicle, but it is not the only fatality. Tesla has had two fatal crashes in which Autopilot was engaged. One occurred in March 2018, when a Model X SUV hit a concrete highway barrier and burst into flames, killing the driver. The first fatal crash involving a self-driving system occurred in 2016 in Florida, when a Model S crashed into a tractor-trailer that turned across the car’s path; neither the driver nor Autopilot detected the white trailer against the brightly lit sky.

After the fatal accident in Arizona, legislators here in Minnesota are considering a bill that would ban driverless vehicles from the road until they are proven safe. Four state senators support the proposal, including state Sen. Jim Abeler, who is eager to start the debate but does not expect the bill to pass in 2018.

The proposal marks a shift for Minnesota, which has historically supported driverless technology by allowing testing of both private vehicles and driverless public transport. In March, for instance, Transportation Commissioner Charlie Zelle suggested that Minnesota should be a pilot state for testing self-driving technology. To that end, a 15-member group of state officials will tackle the questions raised by autonomous vehicles, from traffic regulations to privacy concerns.

Safety and Liability for Self-Driving Cars

Tesla maintains that Autopilot is a driver assistance tool, which is a far cry from suggesting the car can actually drive itself. After high-profile crashes, Tesla developed a system to keep drivers’ attention on the road even when Autopilot is engaged. If a driver’s hands leave the wheel for more than a few seconds, a visual warning appears on the dashboard. If the driver continues to ignore these warnings, the system turns on the flashers and brings the car to a safe stop.

However, experts suggest that systems like Tesla’s Autopilot can lull users into a false sense of security. If you feel the steering wheel moving under your fingertips for hours without incident, your attention is likely to wander, making it very difficult to take control quickly in an emergency. We also know that automakers have a long history of protecting their profits by denying and covering up responsibility for manufacturing defects. Given all the highly advanced, untested technology a truly autonomous vehicle requires, defects are likely.

Unfortunately, the pattern among automakers so far has been to blame the human driver first when a crash involves self-driving technology. We believe that as cars become more fully autonomous, it makes less and less sense to hold human drivers liable. The best solution is to apply the legal doctrine of strict liability, under which manufacturers would take full responsibility for crashes that occur while the automated system is driving. Common carriers such as bus companies, airlines and train operators are already held to the highest degree of care for a similar reason: passengers are completely dependent on the carrier for their safety.

By taking human error out of the driving equation, self-driving cars could make roads significantly safer and reduce injuries here in Minnesota and around the nation. In the meantime, we must move ahead cautiously and address the many unsolved safety, regulatory and legal issues raised by the rise of autonomous vehicles.

Needless to say, if you are involved in a collision with a self-driving car at any level of automation, you will need experienced legal representation.